This article originally appeared on the Transform with Google Cloud blog.

The IceCube Neutrino Observatory is one of a growing number of science initiatives to use scalable cloud infrastructure to process massive datasets

About 4 billion years ago, a quasar was . . . having a moment.

The supermassive black hole at the heart of a galaxy known as TXS 0506+056, near the constellation Orion, had a violent hiccup and burped particles at nearly the speed of light straight across the universe. Those subatomic particles, called neutrinos, set out on an uninterrupted cosmic journey of 1,750 megaparsecs. On September 22, 2017, one of them passed through the ice in Antarctica, where it was detected by scientists who were then able to trace it back to its source.

Neutrinos are among the smallest known things in the universe, roughly 10⁻³¹ times the size of a snowflake. Because they have very little mass and no electric charge, they are of special interest to astrophysicists: they can cross the universe without being deflected by magnetic fields and other influences that bend the trajectories of charged particles such as protons and electrons. In principle, then, neutrinos can be traced back to their point of origin, typically a powerful celestial event such as a supernova or a quasar powering an active galactic nucleus.

That is, if they can be detected at all.

Because neutrinos are so tiny and carry no electric charge, they are very difficult to detect. And yet they are among the most abundant particles in the universe, and they may be key to understanding astrophysical phenomena such as exploding stars, gamma-ray bursts, dark matter, and dark energy.

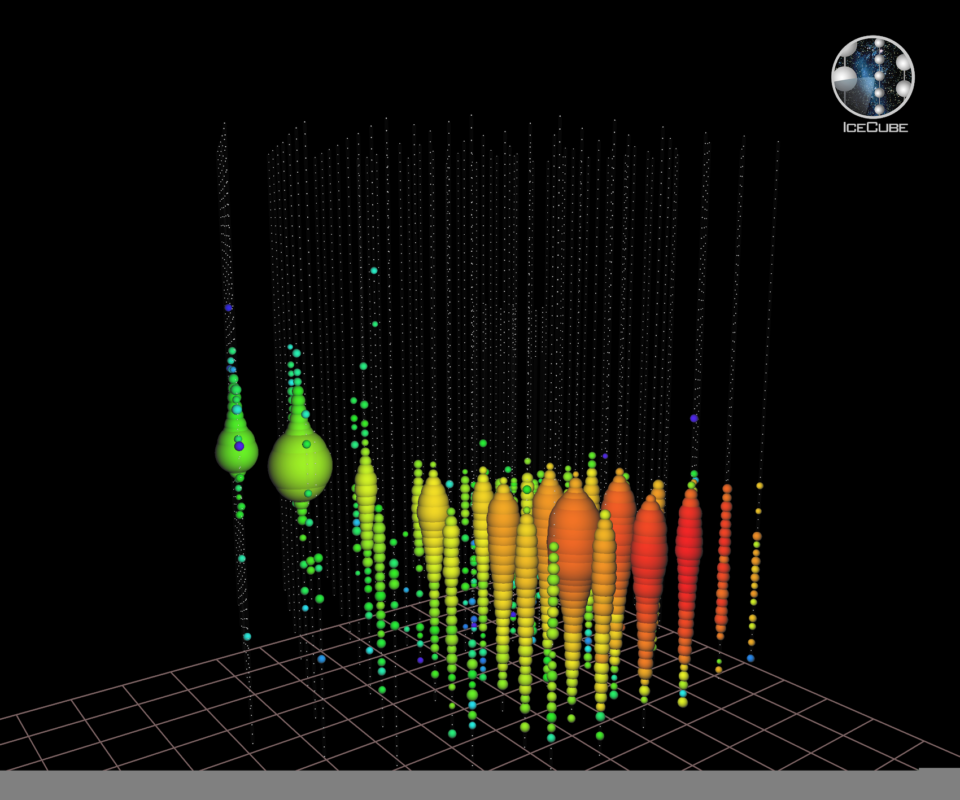

The 2017 detection was made by scientists working on the IceCube Neutrino Observatory. IceCube’s sensors cannot see neutrinos directly as they pass through the solid ice of Antarctica; they register them only indirectly, on the rare occasions when a neutrino interacts with the ice on its way through.

IceCube generates massive amounts of data every day, which makes computing power central to the search for one of the universe’s most mystifying particles. Cloud computing, with tens of thousands of GPUs available on demand, can help IceCube and other particle detectors around the world achieve their missions faster and more efficiently.

How neutrinos are detected at IceCube

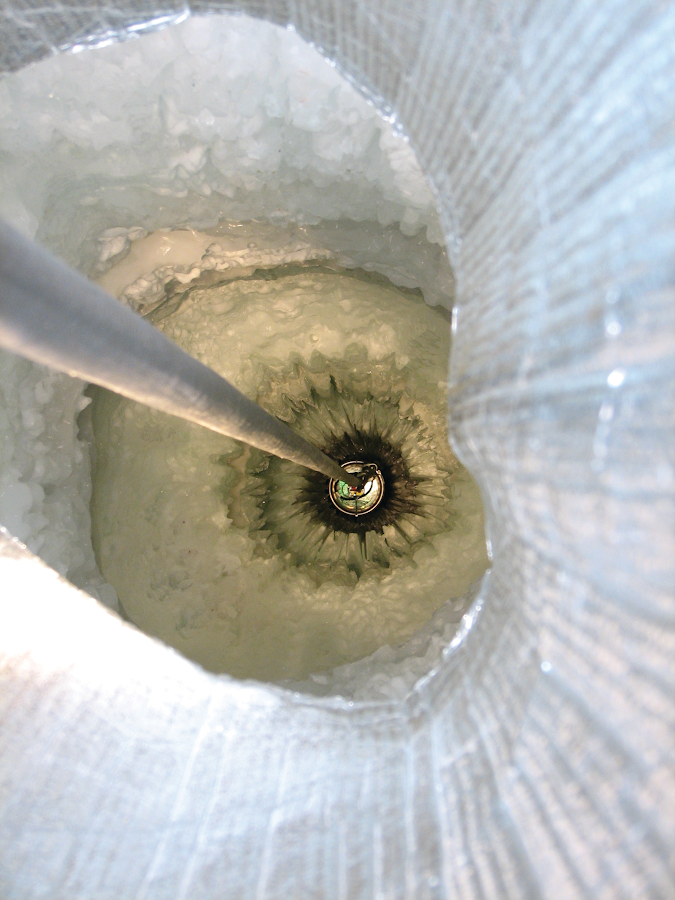

IceCube was built in Antarctica because of the nature of its ice. The deep ice at the South Pole is compact and clear, with any air bubbles that might interfere with measurements squeezed out of it. The ice above the detector also shields it from radiation at Earth’s surface.

“In order to detect the light given off by the secondary particles produced by neutrino interactions, we needed a large volume of transparent material,” the IceCube team states on its website. “That basically meant water or ice. Because these interactions are rare and produce light that can extend over a kilometer, IceCube requires a lot of ice atoms to capture one event. The South Pole is the one place on Earth that holds such large quantities of clear, pure, and stable ice and has the infrastructure to support scientific research.”

IceCube is located at the Amundsen–Scott South Pole Station in Antarctica. Conceived and developed by research scientists at the University of Wisconsin–Madison, IceCube was constructed between 2005 and 2010. The initial cost was $279 million, with most of the funding coming from the National Science Foundation.

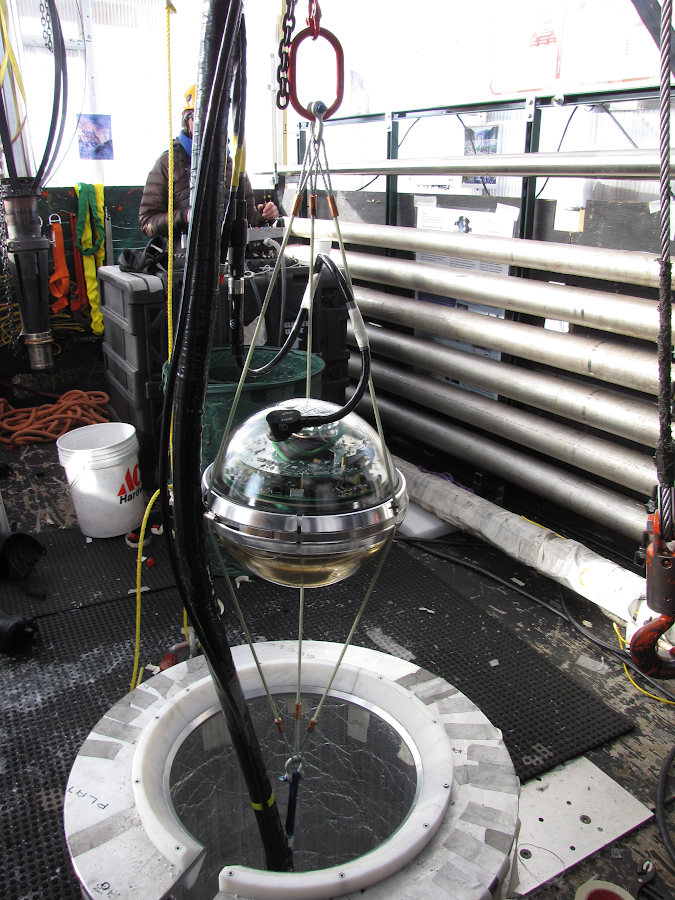

Embedded in the ice at depths of roughly 1,450 to 2,450 meters are around 5,000 sensors called digital optical modules (DOMs), spread throughout a cubic kilometer of ice. The DOMs detect neutrinos indirectly: when a neutrino interacts with the ice, it produces secondary charged particles that give off a telltale burst of blue light known as Cherenkov light.
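The Cherenkov condition behind this kind of detection can be worked through numerically. A charged particle emits Cherenkov light only when it moves faster than light travels in the medium, that is, when its speed β = v/c exceeds 1/n, and the light spreads out in a cone with cos θ = 1/(nβ). The short sketch below assumes a refractive index of about 1.31 for ice (an approximate textbook value, not an IceCube-specific parameter), and its function names are purely illustrative. For a particle moving at nearly the speed of light, it gives a threshold of about 0.763 c and a cone of roughly 40 degrees.

```python
import math

# Assumed refractive index of ice for visible light (approximate, illustrative only).
N_ICE = 1.31


def cherenkov_threshold_beta(n: float) -> float:
    """Minimum speed (as a fraction of c) for a charged particle to emit Cherenkov light."""
    return 1.0 / n


def cherenkov_angle_deg(beta: float, n: float) -> float:
    """Opening angle of the Cherenkov cone, from cos(theta) = 1 / (n * beta)."""
    cos_theta = 1.0 / (n * beta)
    if cos_theta > 1.0:
        # Slower than light in the medium: no Cherenkov emission at all.
        raise ValueError("particle is below the Cherenkov threshold; no light is emitted")
    return math.degrees(math.acos(cos_theta))


if __name__ == "__main__":
    print(f"threshold speed: {cherenkov_threshold_beta(N_ICE):.3f} c")
    print(f"cone angle for beta ~ 1: {cherenkov_angle_deg(1.0, N_ICE):.1f} degrees")
```

The fixed cone angle is what makes the light pattern useful: knowing the geometry of the emission lets the arrival times and positions of light at the sensors constrain the direction of the particle that produced it.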

“We will start seeing into the guts of the most energetic objects in the universe,” John Learned, a professor at the University of Hawaii, told The New York Times when the discovery was announced.

Beyond the compute power required to study those energetic objects, the fact that researchers as far away as Madison and Honolulu can access data in near real time from the bottom of the planet is another testament to the value of the cloud.

Supercharging neutrino detection and simulation with GPUs

Most of IceCube’s computing needs are handled by the Open Science Grid, a distributed computing infrastructure shared by academic research institutions. The most computationally expensive part of IceCube’s workload is the “ray tracing” code that simulates how light from neutrino interactions travels and scatters through the ice, work that underpins reconstructing a detected neutrino’s path and tracing it back to its cosmic source. This work is best suited to graphics processing units (GPUs), which are built to run huge numbers of similar calculations in parallel, the same strength that makes them popular for machine learning and image processing.
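To make the idea of GPU-friendly light simulation more concrete, here is a toy Monte Carlo sketch of photons random-walking through ice until they are absorbed or reach a spherical shell. It is not IceCube’s simulation code: isotropic scattering, the 25 m scattering length and 100 m absorption length in the example call, and all function names are illustrative assumptions (real glacial ice scatters light mostly forward, and its optical properties vary with depth). What it does show is the property that makes this kind of work a good fit for GPUs: every photon is traced independently, so thousands can be simulated in parallel.

```python
import math
import random


def random_unit_vector(rng: random.Random) -> tuple[float, float, float]:
    """Isotropic direction. Simplifying assumption: real ice scatters mostly forward."""
    cos_theta = rng.uniform(-1.0, 1.0)
    sin_theta = math.sqrt(1.0 - cos_theta * cos_theta)
    phi = rng.uniform(0.0, 2.0 * math.pi)
    return (sin_theta * math.cos(phi), sin_theta * math.sin(phi), cos_theta)


def fraction_reaching_shell(
    n_photons: int,
    shell_radius_m: float,
    scattering_length_m: float,
    absorption_length_m: float,
    seed: int = 0,
) -> float:
    """Toy model: fraction of photons from the origin that reach a sphere before absorption."""
    if n_photons <= 0:
        raise ValueError("n_photons must be positive")
    rng = random.Random(seed)
    reached = 0
    for _ in range(n_photons):  # each photon is independent: the part a GPU parallelizes
        # Total path length this photon can travel before it is absorbed.
        budget = rng.expovariate(1.0 / absorption_length_m)
        x = y = z = 0.0
        while budget > 0.0:
            # Distance to the next scattering event, capped by the remaining path budget.
            step = min(rng.expovariate(1.0 / scattering_length_m), budget)
            budget -= step
            dx, dy, dz = random_unit_vector(rng)
            x, y, z = x + step * dx, y + step * dy, z + step * dz
            # A straight segment starting inside a sphere that ends outside must have crossed it.
            if x * x + y * y + z * z >= shell_radius_m ** 2:
                reached += 1
                break
    return reached / n_photons


if __name__ == "__main__":
    # Hypothetical parameters chosen only for illustration.
    print(fraction_reaching_shell(10_000, shell_radius_m=50.0,
                                  scattering_length_m=25.0, absorption_length_m=100.0))
```

Production photon-propagation codes keep the same per-photon independence but replace the loop with thousands of GPU threads and use measured, depth-dependent ice properties.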

The problem was that the Open Science Grid has limited GPU capacity, so ray-tracing simulations could take a long time to complete. IceCube researchers turned to Google Cloud, using its GPUs and Google Kubernetes Engine (GKE) to expand their computing resources.
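As a rough illustration of how a batch of GPU work can be described for GKE, the sketch below builds a Kubernetes `batch/v1` Job manifest in Python and prints it as JSON, a format `kubectl apply -f` accepts. It is a generic example, not IceCube’s actual configuration: the job name, container image, accelerator type, and pod counts are hypothetical, and it assumes a GKE cluster that already has a GPU node pool with NVIDIA drivers installed. The two GPU-specific pieces are the `nvidia.com/gpu` resource limit and the `cloud.google.com/gke-accelerator` node selector.

```python
import json


def gpu_job_manifest(
    name: str,
    image: str,
    parallelism: int,
    completions: int,
    gpus_per_pod: int = 1,
    accelerator: str = "nvidia-tesla-t4",  # hypothetical choice of GPU type
) -> dict:
    """Build a Kubernetes Job that runs `completions` GPU pods, at most `parallelism` at once."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": {
            "parallelism": parallelism,
            "completions": completions,
            "backoffLimit": 4,  # retry failed pods a few times before failing the Job
            "template": {
                "spec": {
                    "restartPolicy": "Never",
                    # GKE labels GPU nodes with their accelerator type; this pins pods to them.
                    "nodeSelector": {"cloud.google.com/gke-accelerator": accelerator},
                    "containers": [
                        {
                            "name": "photon-sim",  # hypothetical container name
                            "image": image,
                            # GPUs are requested as an extended resource under limits.
                            "resources": {"limits": {"nvidia.com/gpu": gpus_per_pod}},
                        }
                    ],
                }
            },
        },
    }


if __name__ == "__main__":
    manifest = gpu_job_manifest(
        name="photon-sim-batch",
        image="example.com/photon-sim:latest",  # hypothetical image
        parallelism=50,
        completions=1000,
    )
    print(json.dumps(manifest, indent=2))
```

Saved as, say, `make_job.py`, the output could be applied with `python make_job.py > job.json && kubectl apply -f job.json`. Setting `parallelism` below `completions` lets Kubernetes work through a large batch with a bounded number of GPUs at a time. Every pod here runs the same container, so a real workload would also need a way to hand each pod a distinct slice of work, for example Kubernetes’ Indexed completion mode.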

“The most computationally intensive part of the IceCube simulation workflow is ray-tracing or graphics rendering that simulates the physical behavior of light, and that code can greatly benefit from running on GPUs on GKE,” said Benedikt Riedel, global computing coordinator of the IceCube Neutrino Observatory and computing manager at the Wisconsin IceCube Particle Astrophysics Center at the University of Wisconsin–Madison.

Research scientists around the world are increasingly turning to hyperscale public clouds to process massive datasets. For instance, the Asteroid Institute recently combed through years of archival data to help find new asteroids in the solar system by running a new algorithm in the cloud.

“With the help of a public cloud, researchers can quickly get access to large computational resources when they need them,” Riedel said. “Researchers, developers and infrastructure teams can run the workloads without having to worry about underlying infrastructure, portability, compatibility, load balancing and scalability issues.”

Go deeper on neutrino discovery

Want to explore the technical details of how the IceCube observatory uses GPUs and Google Kubernetes Engine in its search for neutrinos? Read more on the Google Cloud Blog.